Sample Automaton

This article is intended to help you bootstrap your ability to work with Finite State Automata (note: “automata” is simply the plural of “automaton”). Automata are a unique data structure, requiring a bit of theory to process and understand. Hopefully what's below can give you a foundation for playing with these fun and useful Lucene data structures!

Motivation: Why Automata?

When working in search, a big part of the job is making sense of loosely structured text. For example, suppose we have a list of about 1,000 valid first names and 100,000 last names. Before ingesting data into a search application, we need to extract first and last names from free-form text.

Unfortunately, the data sometimes has full names in the format “LastName, FirstName”, like “Turnbull, Doug”. In other places, however, full names are listed as “FirstName LastName”, like “Doug Turnbull”. Add a few extra representations, and making sense of which strings represent valid names becomes a chore.

This becomes especially troublesome when we're depending on these names as natural identifiers for looking up or joining across multiple data sets. Each data set might textually represent the natural identifier in subtly different ways. We want to capture the representations used across all the data sets to ensure our join works properly.

So… what's a text jockey to do when faced with such annoying inconsistencies?

You might initially think “regular expression”. Sadly, a normal regular expression can't really help in this case. Just writing a regular expression that allows a controlled vocabulary of 100k valid last names, and nothing else, is non-trivial, not to mention the task of actually using such a regular expression.

But there is one tool that looks promising for solving this problem: Lucene 4.0's new Automaton API. Let's explore what this API has to offer, starting with a quick refresher on a bit of CS theory.

Theory – Finite State Automata and You!

Lucene's Automaton API provides a way to create and use Finite State Automata (FSAs). For those who don't remember their senior-level computer science, an FSA is a computational model in which input symbols are read by the computer (the automaton) to drive a state machine. The state machine is simply a graph with nodes and labeled, directed edges. Each node in the graph is a state, and each edge is a transition labeled with a potential input symbol. By matching the current node's outgoing edges against the current input symbol, the automaton follows an edge to the next state. The next input symbol is read, and based on the transitions out of the new state, we move to yet another state, and so on.

More importantly here, FSAs are a way of specifying a regular language. If we treat the input symbols as the alphabet of a language, we can use an FSA both to specify which strings of those symbols belong to the language and to determine whether a given input string is a member. We do this by processing the input string one symbol at a time, following the FSA's transitions. If we exhaust the input symbols while sitting on an accepting (“terminus”) node, then the string of symbols can be said to match the language. Otherwise, either because we stopped on a non-accepting node or because no transition matched the next symbol, the input string does not match the language.

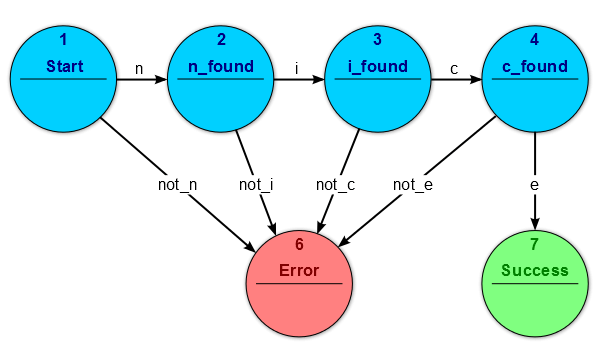

For example, in the automaton shown in the figure, the string “nice”, that is, the sequence of symbols “n”, “i”, “c”, “e”, is accepted as a member of the language. All other strings are rejected.
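
To make the acceptance rule concrete, here is a minimal sketch of the “nice” automaton as a hand-rolled deterministic automaton in plain Java. It is illustrative only: the ToyDfa class and its methods are hypothetical and are not part of Lucene's API. States are integers, each state maps an input character to the next state, and a string is accepted only if every symbol finds a matching edge and the input runs out on an accepting state.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical toy DFA for illustration; not Lucene's Automaton class.
class ToyDfa {
    // Edges: state -> (input symbol -> next state).
    private final Map<Integer, Map<Character, Integer>> edges =
            new HashMap<Integer, Map<Character, Integer>>();
    private final Set<Integer> accepting = new HashSet<Integer>();
    private static final int START = 0;

    void addTransition(int from, char symbol, int to) {
        edges.computeIfAbsent(from, k -> new HashMap<Character, Integer>()).put(symbol, to);
    }

    void markAccepting(int state) {
        accepting.add(state);
    }

    // Reads the input one symbol at a time, following matching edges.
    boolean accepts(String input) {
        int state = START;
        for (char c : input.toCharArray()) {
            Map<Character, Integer> out = edges.get(state);
            Integer next = (out == null) ? null : out.get(c);
            if (next == null) {
                return false; // no edge matches this symbol: reject
            }
            state = next;
        }
        // Input exhausted: accept only if we stopped on an accepting state.
        return accepting.contains(state);
    }

    // Builds the chain 0 -n-> 1 -i-> 2 -c-> 3 -e-> 4, with state 4 accepting.
    static ToyDfa nice() {
        ToyDfa dfa = new ToyDfa();
        String word = "nice";
        for (int i = 0; i < word.length(); i++) {
            dfa.addTransition(i, word.charAt(i), i + 1);
        }
        dfa.markAccepting(word.length());
        return dfa;
    }
}
```

With this automaton, accepts("nice") is true, while “nic” (ends on a non-accepting state), “nicer” (no edge for “r”), and “mice” (no edge for “m”) are all rejected.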

In Practice – An Automaton as a Data Structure

In practice, Lucene automata are useful as a data structure that bridges the gap between a traditional Set and a hand-written regular expression. Compared to a HashSet or TreeSet, the in-memory representation can be much, much smaller, because strings that share prefixes and suffixes can share states, while lookups stay very fast. Moreover, automata give you fuzzier features like regular-expression matching.

It's not a general-purpose Set replacement, however. For an automaton, the “set” is all the strings of symbols that match the language. Because of fuzzy matching features such as regular expressions, enumerating all the strings that match the language (that is, enumerating the members of the set) is non-trivial and might never terminate. Think about it this way: enumeration means traversing the graph path by path, so every node, whether a terminus or not, must be visited. If the automaton contains a cycle, which is exactly what a * in a regular expression produces, that traversal never terminates: enumerating the matching strings would involve repeating the pattern before the * forever.
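
A small plain-Java sketch shows the problem. The hypothetical BoundedEnumeration class below hard-codes a toy automaton for the regular expression “ab*”, whose accepting state has a transition looping back to itself. Walking every path from the start state would never finish, so the sketch stops at a caller-supplied maximum length. That cutoff is just one simple workaround for illustration, not a description of how Lucene enumerates strings.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration; not Lucene code.
class BoundedEnumeration {
    // Toy automaton for "ab*":
    // state 0 --a--> state 1 (accepting), state 1 --b--> state 1 (a cycle).
    static final Map<Integer, Map<Character, Integer>> EDGES =
            new HashMap<Integer, Map<Character, Integer>>();
    static final Set<Integer> ACCEPTING = new HashSet<Integer>();

    static {
        EDGES.put(0, new HashMap<Character, Integer>());
        EDGES.put(1, new HashMap<Character, Integer>());
        EDGES.get(0).put('a', 1);
        EDGES.get(1).put('b', 1);
        ACCEPTING.add(1);
    }

    // Depth-first walk of every path, collecting the label of each path that
    // ends on an accepting state. Without the maxLength cutoff, the b-loop on
    // state 1 would make this recurse forever.
    static void walk(int state, String prefix, int maxLength, List<String> out) {
        if (ACCEPTING.contains(state)) {
            out.add(prefix);
        }
        if (prefix.length() == maxLength) {
            return;
        }
        for (Map.Entry<Character, Integer> e : EDGES.get(state).entrySet()) {
            walk(e.getValue(), prefix + e.getKey(), maxLength, out);
        }
    }

    static List<String> enumerate(int maxLength) {
        List<String> out = new ArrayList<String>();
        walk(0, "", maxLength, out);
        return out;
    }
}
```

Here enumerate(4) returns “a”, “ab”, “abb”, and “abbb”; raising the limit only produces more strings, because the language is infinite.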

Another factor to consider: while lookups are fast and memory usage can be much, much smaller than with a Set, building the automaton (indexing it) can be very time-consuming. If you create an automaton once, up front, to specify a relatively static language, this isn't a big deal. But for data structures that change frequently, automata shouldn't be your first choice.

Compared to a regular expression, an Automaton lends itself to holding far larger and more complex regular languages than you could practically write as a traditional regular expression. For example, it would be difficult to write a regular expression that accepts any of 500,000 potential first names followed by any of 1,000 last names, unioned with any of those 1,000 last names followed by a comma and one of the 500,000 first names. That's Perl that isn't even write-only. Automata let you effectively build an extremely rich and large “regular expression” in readable code, without making generating or parsing such a regular expression a giant chore.
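
To make the pain concrete, here is roughly what the regular-expression route looks like with plain java.util.regex (not Lucene), generating the pattern from word lists instead of typing it by hand. It is an illustrative sketch; the RegexNameValidator class and its methods are hypothetical. Notice that both name lists end up spelled out twice inside a single pattern string.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical comparison sketch using java.util.regex, not Lucene.
class RegexNameValidator {
    // Builds "(?:w1|w2|...)". Each word is quoted so that any regex
    // metacharacters in a name are matched literally.
    static String alternation(List<String> words) {
        return words.stream()
                .map(Pattern::quote)
                .collect(Collectors.joining("|", "(?:", ")"));
    }

    // Accepts either "FirstName LastName" or "LastName, FirstName", with one
    // or more spaces or tabs as the separator. Every first and last name
    // appears twice in the resulting pattern.
    static Pattern build(List<String> firstNames, List<String> lastNames) {
        String first = alternation(firstNames);
        String last = alternation(lastNames);
        return Pattern.compile(first + "[ \\t]+" + last + "|" + last + ",[ \\t]+" + first);
    }
}
```

With first names {Doug, John} and last names {Berryman, Turnbull}, build(...).matcher("Turnbull, Doug").matches() is true and "Doug Pugh" is rejected. That is manageable with four names; with hundreds of thousands, the pattern string becomes enormous and hard to reason about, which is exactly the chore automata help you avoid.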

Next, let's see how to actually build a useful automaton.

Teh Codez – Building an Automaton

(Note: all the code below can be found at this GitHub repo.)

To show off the API, let's take the example we discussed at the outset. Say our language allows two forms of full names: “FirstName LastName” and “LastName, FirstName”. For simplicity, let's validate against only a small set of names: first names {“Doug”, “John”} and last names {“Berryman”, “Turnbull”}. So in our world, the valid names are “Doug Berryman”, “Doug Turnbull”, “John Berryman”, and “John Turnbull”. Forms where the last name comes first, followed by a comma and then the first name (i.e. “Turnbull, Doug”), are also considered valid.

So how do we use the Lucene API to build an Automaton that validates this syntax?

There are two key classes that you'll use over and over for building meaningful automata, both in the org.apache.lucene.util.automaton package. First, the BasicAutomata class provides static methods for constructing automata out of simple building blocks (in our case, individual strings). Second, the BasicOperations class provides utility methods for combining automata into unions, intersections, or concatenations of other automata.

Beyond these two central classes, we can also fold in automata produced by other classes, for example regular expressions via the RegExp class.

OK, now let's actually start putting together some code. Let's first look at the form “FirstName LastName”. We want to specify a language that accepts any of our first names {“John”, “Doug”}, followed by one or more spaces or tabs, followed by any of our last names {“Turnbull”, “Berryman”}.

Our first piece of code forms the foundation for all of our other automata. One of the basic automata we need to build is simply one built from a set of valid strings. For example, an automaton for last names that says “Turnbull” is valid, “Berryman” is valid, but “Pugh” is not.

As this task is going to be a pretty common occurrence, we'll use this utility function, which builds an automaton that accepts only the values in the passed-in String array:

```java
import org.apache.lucene.util.automaton.Automaton;
import org.apache.lucene.util.automaton.BasicAutomata;
import org.apache.lucene.util.automaton.BasicOperations;

/**
 * @param strs
 *            All the strings that we want to allow in the returned language
 * @return
 *            An automaton that allows only the passed in strings
 */
public static Automaton stringUnionAutomaton(String[] strs) {
    Automaton strUnion = null;
    // Simply loop through the strings and place them in the automaton
    for (String str : strs) {
        // Basic building block: make an automaton that accepts a single
        // string
        Automaton currStrAutomaton = BasicAutomata.makeString(str);
        if (strUnion == null) {
            strUnion = currStrAutomaton;
        } else {
            // Combine the current string with the automaton for the
            // previous strings, saying that this new string is also valid
            strUnion = BasicOperations.union(strUnion, currStrAutomaton);
        }
    }
    // An empty input array yields an automaton that accepts nothing,
    // rather than null
    return strUnion == null ? BasicAutomata.makeEmpty() : strUnion;
}
```

Notice how on every iteration, we create an automaton for the current string. The first iteration initializes the resulting automaton (`strUnion`) to the current string's automaton (`currStrAutomaton`). On subsequent iterations, we set `strUnion` to the union of `currStrAutomaton` and the previous value of `strUnion`. Finally, we return `strUnion` as the union of all the strings passed in.

With this building block, we can build up a more complex Automaton for our FirstName LastName form:

```java
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.util.automaton.RegExp;

/**
 * @param firstNames
 *            Set of allowable first names
 * @param lastNames
 *            Set of allowable last names
 * @return
 *            An automaton that allows FirstName[ \t]+LastName
 */
public static Automaton createFirstBeforeLastAutomaton(String[] firstNames, String[] lastNames) {
    List<Automaton> allAutomatons = new ArrayList<Automaton>();
    // Use our builder to create an automaton that allows all the first names
    allAutomatons.add(stringUnionAutomaton(firstNames));

    // Add in an automaton that allows one or more spaces or tabs, built
    // from a regular expression
    RegExp anyNumberOfSpaces = new RegExp("[ \t]+");
    Automaton anySpaces = anyNumberOfSpaces.toAutomaton();
    allAutomatons.add(anySpaces);

    // Add in an automaton that allows all our last names
    allAutomatons.add(stringUnionAutomaton(lastNames));

    // Return the concatenation of all these automata, in order
    return BasicOperations.concatenate(allAutomatons);
}
```

In this code, we're building three automata and returning their concatenation. A string is a member of the concatenated automaton's language if it can be split into three consecutive pieces such that the first piece is accepted by the first automaton, the second piece by the second automaton, and the final piece, which must end exactly where the input runs out, by the last automaton.

Note the use of the RegExp class. It compiles a regular expression into an automaton, here one that matches one or more tabs or spaces. Keep in mind that RegExp uses Lucene's own regular-expression syntax, which is not identical to java.util.regex.
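
Lucene wires the automata together to evaluate a concatenation, but the membership rule itself can be illustrated without Lucene. The following plain-Java sketch (the ConcatSketch class and its methods are hypothetical, not Lucene's API) models each piece of the language as a Predicate<String> and tries every split point, using java.util.regex as a stand-in for RegExp in the separator piece. This brute-force search can be exponential in the worst case and is only meant to make the definition concrete; an automaton answers the same question in a single left-to-right pass over the input.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;
import java.util.regex.Pattern;

// Hypothetical illustration of concatenation semantics; not Lucene code.
class ConcatSketch {
    // A string is in the concatenation of parts[0..n) if it can be cut into
    // n consecutive pieces with piece i accepted by parts[i].
    static boolean inConcatenation(String s, List<Predicate<String>> parts) {
        return matchFrom(s, 0, parts, 0);
    }

    // Tries every possible end point for the current piece, then recurses on
    // the rest of the input with the next part.
    private static boolean matchFrom(String s, int start,
                                     List<Predicate<String>> parts, int part) {
        if (part == parts.size()) {
            // Every part matched: succeed only if the input is used up too.
            return start == s.length();
        }
        for (int end = start; end <= s.length(); end++) {
            if (parts.get(part).test(s.substring(start, end))
                    && matchFrom(s, end, parts, part + 1)) {
                return true;
            }
        }
        return false;
    }

    // Mirrors the shape of createFirstBeforeLastAutomaton: a first name, then
    // one or more spaces or tabs, then a last name.
    static boolean isFirstBeforeLast(String s) {
        final Set<String> first = new HashSet<String>(Arrays.asList("Doug", "John"));
        final Set<String> last = new HashSet<String>(Arrays.asList("Berryman", "Turnbull"));
        final Pattern spaces = Pattern.compile("[ \t]+");
        List<Predicate<String>> parts = new ArrayList<Predicate<String>>();
        parts.add(first::contains);
        parts.add(piece -> spaces.matcher(piece).matches());
        parts.add(last::contains);
        return inConcatenation(s, parts);
    }
}
```

For example, “Doug Turnbull” splits into “Doug”, “ ”, and “Turnbull” and is accepted, while “DougTurnbull” has no non-empty separator piece and is rejected.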

The “LastName, FirstName” form is similar:

```java
/**
 * @return An automaton that allows LastName,[ \t]+FirstName
 */
public static Automaton createLastBeforeFirstAutomaton(String[] firstNames, String[] lastNames) {
    List<Automaton> allAutomatons = new ArrayList<Automaton>();
    // Last names come first in this form
    allAutomatons.add(stringUnionAutomaton(lastNames));

    // Separator: a comma followed by one or more spaces or tabs
    RegExp commaPlusAnyNumberOfSpaces = new RegExp(",[ \t]+");
    allAutomatons.add(commaPlusAnyNumberOfSpaces.toAutomaton());

    // Then the first names
    allAutomatons.add(stringUnionAutomaton(firstNames));
    return BasicOperations.concatenate(allAutomatons);
}
```

The only differences here are that we're validating last names before first names, and that our regex separator now starts with a comma. Otherwise, this is very similar to the other form.

To finish things off, we simply have to create an automaton that takes the union of both forms, so that strings from either language are valid in the resulting language:

```java
/**
 * @return An automaton accepting either "FirstName LastName" or
 *         "LastName, FirstName"
 */
public static Automaton createNameValidator(String[] firstNames, String[] lastNames) {
    return BasicOperations.union(
            createFirstBeforeLastAutomaton(firstNames, lastNames),
            createLastBeforeFirstAutomaton(firstNames, lastNames));
}
```

Woohoo! You should be ready to go! Just keep in mind one or two things when building more complex automata:

Automaton Creation Considerations

One of the things that makes the Automaton special is its potentially minimal in-memory representation. This gives you powerful lookup capabilities against a complex language with a large vocabulary, but noticeably increases build (“index”) time compared to a traditional data structure.

However, it's important to note that Lucene won't necessarily keep the most compact representation of an automaton in memory as you build it; operations like union and concatenation can leave redundant states behind. Without minimizing, you could have problems with lookup speed, and as the automaton grows you can easily exhaust the JVM heap.

For example, if we said that “Ed” and “Eddy” are valid strings in our language, we might initially have something like this (where [] is a state and [*] is an accepting state):

```
[]---E--->[]---d--->[*]
 \
  ---E--->[]---d--->[]---d--->[]---y--->[*]
```

Add this up over time, and we end up with a horrendous-looking graph that leaves you wondering why anyone would ever bother using automata!

Part of the secret sauce of Lucene's automata is minimization. Instead of representing the graph as a gnarly web of duplicated gobbledygook, we can run the minimization operation on the graph above, reducing it to simply:

```
[]---E--->[]---d--->[*]
                      \
                       ---d--->[]---y--->[*]
```

By periodically calling MinimizationOperations.minimize (or the Automaton.minimize convenience method that delegates to it), you can reduce the memory footprint of your automaton.

You can roughly track how big your automaton is getting with its getNumberOfStates() and getNumberOfTransitions() methods. It's generally a good idea to keep an eye on the size of your automaton and deal with any bloat that occurs while building it.
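
To see where the savings in the “Ed”/“Eddy” example come from, the following plain-Java sketch counts states and transitions for the two shapes in the diagrams above. It is an illustration under simplifying assumptions, not Lucene's minimization algorithm: the hypothetical AutomatonSizeSketch class compares a naive union (one separate chain per string) with prefix sharing (a trie). Real minimization is more general and also merges equivalent suffixes, so on larger vocabularies it can shrink things further; for this two-word example the prefix-shared version already matches the minimized diagram.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration; not Lucene's implementation.
class AutomatonSizeSketch {
    // Naive union, like the first diagram: one shared start state plus a
    // separate chain per string, so a string of length n adds n states and
    // n transitions. Returns {states, transitions}.
    static int[] naiveSize(List<String> words) {
        int states = 1; // the shared start state
        int transitions = 0;
        for (String w : words) {
            states += w.length();
            transitions += w.length();
        }
        return new int[] {states, transitions};
    }

    // Prefix sharing (a trie), like the second diagram: strings with a common
    // prefix reuse the same states. Accepting flags are omitted because only
    // sizes are counted. Returns {states, transitions}.
    static int[] prefixSharedSize(List<String> words) {
        List<Map<Character, Integer>> edges = new ArrayList<Map<Character, Integer>>();
        edges.add(new HashMap<Character, Integer>()); // state 0: start
        for (String w : words) {
            int state = 0;
            for (char c : w.toCharArray()) {
                Integer next = edges.get(state).get(c);
                if (next == null) {
                    // No existing edge for this symbol: create a new state.
                    next = edges.size();
                    edges.add(new HashMap<Character, Integer>());
                    edges.get(state).put(c, next);
                }
                state = next;
            }
        }
        int transitions = 0;
        for (Map<Character, Integer> out : edges) {
            transitions += out.size();
        }
        return new int[] {edges.size(), transitions};
    }
}
```

For {“Ed”, “Eddy”}, the naive construction has 7 states and 6 transitions, matching the first diagram, while the prefix-shared version has 5 states and 4 transitions, matching the second.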

Hack away!

I hope you've enjoyed this tutorial. Hack away, and let me know what you think! I hope to follow up with more on how to create and store automata more efficiently, and to delve into their sister data structure in Lucene: Finite State Transducers!
